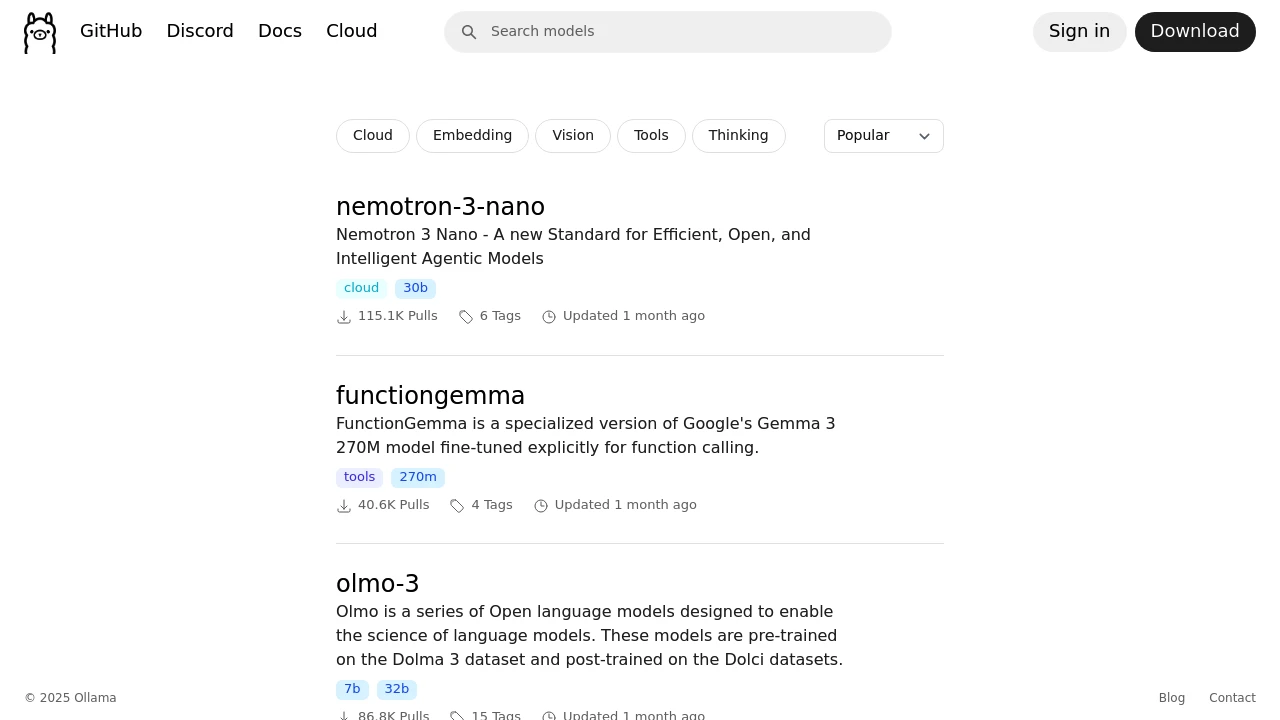

Ollama

Ollama makes running LLMs on your own machine as simple as a single command. No cloud, no API keys, no usage limits: just local AI.

What once required deep technical knowledge now takes a single command: `ollama run llama3`, which downloads the model on first use and starts an interactive session. This has opened up local AI to developers who want privacy, offline capability, or simply the freedom to experiment.
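Behind the CLI, Ollama runs a local server (by default on `localhost:11434`) that serves the same models over HTTP. The following is a minimal sketch using only Python's standard library. It builds a request for Ollama's native `/api/generate` endpoint and assumes the server is running and `llama3` has already been pulled. The helper names `build_generate_request` and `generate` are illustrative, not part of Ollama. The `keep_alive` field controls how long the model stays loaded in memory after the call.

```python
import json
import urllib.request

# Default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's native /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,     # return one JSON object instead of streamed chunks
        "keep_alive": "5m",  # keep the model loaded for 5 minutes after this call
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With the server up, `generate("llama3", "Why is the sky blue?")` should return the model's reply as a string.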

Key Features:

- One-command Setup - Download and run a model with a single command

- Model Library - Access to Llama, Mistral, Phi, Gemma, and dozens more

- Local API - A REST API on `localhost:11434`, plus an OpenAI-compatible endpoint for integration

- Modelfile - Define your own model configurations (base model, parameters, system prompt) in a plain-text file
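The OpenAI-compatible endpoint accepts chat requests in OpenAI's format at `/v1/chat/completions`. As a result, existing OpenAI-style code can often be redirected to a local model just by changing its base URL. This is a minimal standard-library sketch, assuming a running server with `llama3` pulled. The function names are illustrative. The placeholder API key is there only because OpenAI-style clients expect one; Ollama does not check it.

```python
import json
import urllib.request


def build_chat_request(
    messages: list,
    model: str = "llama3",
    base_url: str = "http://localhost:11434/v1",
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at a local Ollama server."""
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Placeholder key: OpenAI-style clients send one, Ollama ignores it.
            "Authorization": "Bearer ollama",
        },
        method="POST",
    )


def chat(messages: list, **kwargs) -> str:
    """Send the request and return the assistant's reply (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(messages, **kwargs)) as resp:
        data = json.loads(resp.read())
    # Responses follow the OpenAI shape: choices[0].message.content
    return data["choices"][0]["message"]["content"]
```

For example, `chat([{"role": "user", "content": "Hello"}])` sends a single user turn to the local `llama3`.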

Why run AI locally:

- Privacy - Your data never leaves your machine

- No API Costs - No per-token fees or rate limits; you pay only for your own hardware and power

- Offline Access - Works without an internet connection once models are downloaded

- Experimentation - Try any model without signup or billing

Technical capabilities:

- GPU Acceleration - Automatic use of NVIDIA (CUDA), Apple Silicon (Metal), and supported AMD (ROCm) GPUs, with CPU fallback

- Memory Management - Loads models on demand and unloads them after a configurable idle period (five minutes by default)

- Concurrent Models - Run multiple models simultaneously, as far as available memory allows

- Custom Models - Import GGUF files and fine-tuned weights
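A Modelfile is a plain-text recipe. `FROM` names a library model or a path to a local GGUF file, `PARAMETER` sets options such as sampling temperature, and `SYSTEM` sets a system prompt. The helper below renders one from Python as a minimal sketch. The function name, the file `./my-model.gguf`, and the model name `my-assistant` in the usage note are hypothetical.

```python
def make_modelfile(base: str, system: str, temperature: float = 0.7) -> str:
    """Render a minimal Modelfile: base weights, one sampling parameter, a system prompt."""
    lines = [
        f"FROM {base}",                            # library model name or path to a .gguf file
        f"PARAMETER temperature {temperature}",    # sampling temperature for this model
        f'SYSTEM """{system}"""',                  # triple quotes allow multi-line prompts
    ]
    return "\n".join(lines) + "\n"
```

Saving `make_modelfile("./my-model.gguf", "You are a concise assistant.")` to a file named `Modelfile` lets you build and run it with `ollama create my-assistant -f Modelfile` followed by `ollama run my-assistant`.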

Ollama fits naturally into AI development workflows. Use it for local testing before switching to production APIs, for privacy-sensitive applications, or simply to explore what's possible with open models. The experience is smooth enough that local models can become the default for many everyday tasks.
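One common way to test locally before moving to a production API is to make the base URL configurable. Code then talks to Ollama's OpenAI-compatible endpoint by default and to a hosted service when configured. The following is a minimal sketch of that pattern. `LLM_BASE_URL` is a hypothetical environment variable name chosen for illustration, not something Ollama reads.

```python
import os
from typing import Mapping, Optional

# Ollama's OpenAI-compatible endpoint on its default port.
LOCAL_OLLAMA = "http://localhost:11434/v1"


def resolve_base_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the base URL for OpenAI-style calls.

    Defaults to the local Ollama server. Setting the hypothetical LLM_BASE_URL
    variable points the same code at a hosted, OpenAI-compatible API instead.
    """
    source = os.environ if env is None else env
    # Drop a trailing slash so callers can append "/chat/completions" safely.
    return source.get("LLM_BASE_URL", LOCAL_OLLAMA).rstrip("/")
```

Model names usually differ between a local server and a hosted API, so in practice the model name would be read from configuration as well.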