The fastest AI inference

Groq delivers AI inference at exceptional speed using custom Language Processing Units (LPUs), chips built specifically for running language models. When latency matters, few alternatives come close.

Frontier AI in your hands

Mistral AI builds efficient language models, many released with open weights, that perform well above what their size would suggest. The Paris-based company is a European AI leader with a focus on openness and performance.

Google's most capable AI model

Gemini is Google's multimodal AI model family, offering state-of-the-art capabilities across text, code, images, and audio with industry-leading context windows.

The fastest way to build generative AI

Fireworks AI delivers blazing-fast inference for open-source and custom models. Optimized infrastructure that makes AI applications feel instant.

The AI cloud for open-source models

Together AI provides infrastructure for running, fine-tuning, and deploying open-source models. The platform for teams that want control over their AI stack.

A unified interface for LLMs

OpenRouter provides a single API to access models from OpenAI, Anthropic, Google, Meta, Mistral, and dozens of other providers. One integration, all the models.

The AI community building the future

Hugging Face is the platform for sharing machine learning models, datasets, and demos. Serve models through the Inference API, or deploy demos and apps to Spaces.

Run and fine-tune open-source models

Replicate lets you run open-source machine learning models with a cloud API. Access thousands of models for image generation, LLMs, and more.

Build with Claude

Anthropic's API gives you access to Claude, the AI assistant known for nuanced understanding and thoughtful responses. It features long context windows and tool use.

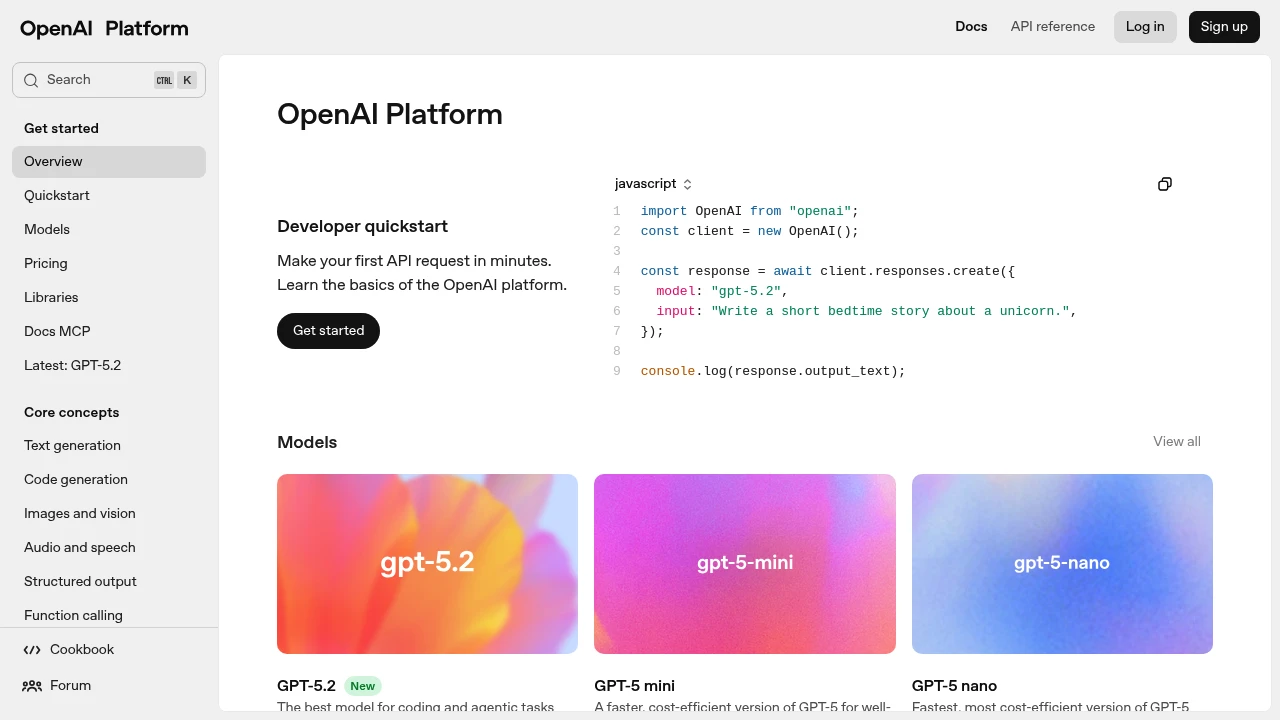

Build with GPT-4, DALL·E, and more

The OpenAI API provides access to GPT-4, GPT-4 Turbo, DALL·E, Whisper, and embedding models. It is the foundation for countless AI applications.