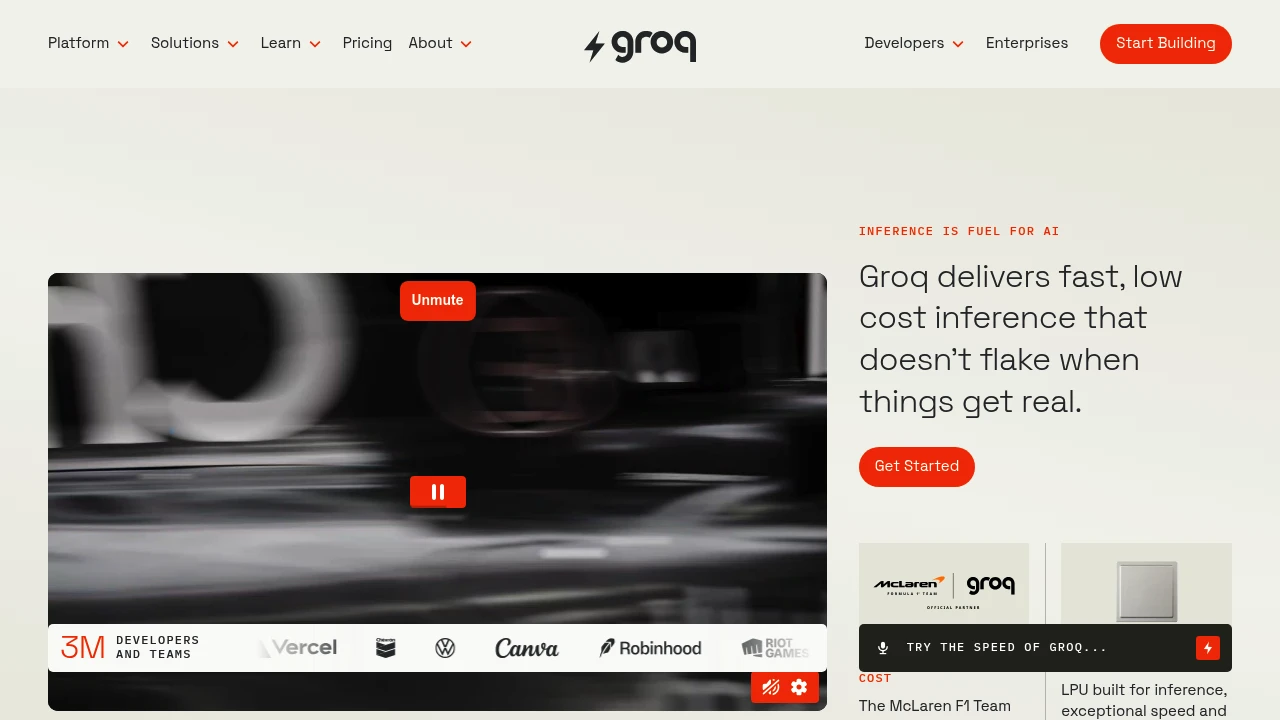

Groq

Groq delivers AI inference at exceptional speed using custom Language Processing Units (LPUs). When latency matters, few alternatives come close.

Groq has redefined what is possible for AI inference speed. Its custom-designed LPUs generate responses so quickly that the bottleneck often shifts from model computation to network latency. This is not an incremental improvement but a different category of performance.

Key Features:

- Sub-second Responses - Output rates of up to thousands of tokens per second, depending on the model

- Consistent Latency - Predictable performance with little variation between requests

- Open Model Support - Run Llama, Mixtral, and other open models

- Simple API - OpenAI-compatible interface for easy integration
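
Because the interface is OpenAI-compatible, a chat request is an ordinary HTTPS POST to Groq's `/openai/v1/chat/completions` endpoint with a bearer token. The sketch below builds that request with only the Python standard library and sends it only if a `GROQ_API_KEY` environment variable is set. The helper names, the `llama-3.1-8b-instant` model ID, and the `User-Agent` string are illustrative assumptions; check Groq's current model list before relying on any model ID.

```python
import json
import os
import urllib.request

# Groq's OpenAI-compatible base URL; the chat completions path mirrors OpenAI's.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


def build_chat_request(api_key: str, model: str, prompt: str,
                       temperature: float = 0.2) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{GROQ_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            # Hypothetical client name; an explicit agent avoids urllib's default.
            "User-Agent": "groq-sketch/0.1",
        },
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Pull the assistant's reply out of an OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("GROQ_API_KEY")
    if key:
        # Hypothetical model ID; replace with one from Groq's model list.
        req = build_chat_request(key, "llama-3.1-8b-instant", "Say hello in five words.")
        with urllib.request.urlopen(req, timeout=30) as resp:
            print(extract_text(json.load(resp)))
```

The same compatibility means the official `openai` Python SDK can be reused by passing `base_url="https://api.groq.com/openai/v1"` and a Groq API key when constructing the client, so existing OpenAI-based code often needs only those two changes.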

Why Groq is different:

- Custom Silicon - Purpose-built LPUs, not repurposed GPUs

- Deterministic Performance - Statically scheduled hardware runs the same workload at the same speed every time

- Real-time Applications - Enable use cases that require instant responses

- Cost Efficiency - Finishing work faster often translates to lower total cost
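
Consistency is something you can check against your own workload: time repeated, identical requests (for example with `time.perf_counter()` around each call) and compare the median with the tail. The helper below is a minimal sketch that only does the statistics; collecting the samples is left to the caller, and the `tail_ratio` metric (p99 divided by p50) is an illustrative choice rather than a Groq-defined measure.

```python
import statistics


def summarize_latencies(samples_ms: list[float]) -> dict[str, float]:
    """Summarize request latencies (milliseconds) to judge how consistent they are."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=100) returns the 99 cut points P1..P99.
    pct = statistics.quantiles(samples_ms, n=100, method="inclusive")
    p50, p99 = pct[49], pct[98]
    return {
        "p50": p50,
        "p99": p99,
        # A ratio close to 1.0 means the slowest requests are barely slower than typical ones.
        "tail_ratio": p99 / p50,
        "stdev": statistics.stdev(samples_ms),
    }
```

A low standard deviation alone can hide rare slow requests, which is why the sketch reports the p99-to-p50 ratio alongside it.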

Use cases where Groq excels:

- Interactive Applications - Chatbots that feel instant

- Voice Assistants - Low enough latency for natural conversation

- Code Completion - Suggestions that appear as you type

- High-volume Processing - Batch jobs that complete in minutes instead of hours
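
The "minutes instead of hours" difference comes down to simple arithmetic on output throughput. The sketch below gives a rough wall-clock estimate, assuming output generation dominates (it ignores prompt processing, network round-trips, and rate limits) and that each concurrent stream sustains the given rate independently. The function name and the throughput figures in the usage note are hypothetical, chosen only to illustrate the calculation, not measured Groq numbers.

```python
def batch_duration_seconds(num_requests: int, output_tokens_per_request: int,
                           tokens_per_second: float, concurrency: int = 1) -> float:
    """Rough wall-clock time to generate every output token in a batch.

    Assumes generation time dominates and each of `concurrency` parallel
    streams produces `tokens_per_second` tokens independently.
    """
    if tokens_per_second <= 0 or concurrency < 1:
        raise ValueError("tokens_per_second must be > 0 and concurrency >= 1")
    total_tokens = num_requests * output_tokens_per_request
    return total_tokens / (tokens_per_second * concurrency)
```

For a hypothetical batch of 10,000 requests at 500 output tokens each (5,000,000 tokens) with 10 parallel streams, a rate of 1,000 tokens per second per stream gives 500 seconds (about 8 minutes), while 50 tokens per second per stream gives 10,000 seconds (close to 3 hours).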

Groq shows that inference speed is a feature, not just an optimization. For applications where perceived latency affects user experience, or where processing time directly impacts cost, Groq's LPU architecture delivers speed that GPU-based solutions struggle to match.